How to Register Gestures in Objective-C

Update note: Ryan Ackermann updated this tutorial for Xcode 11, Swift 5 and iOS 13. Caroline Begbie and Brody Eller wrote earlier updates and Ray Wenderlich wrote the original.

In iOS, gestures like taps, pinches, pans or rotations are used for user input. In your app, you can react to gestures, like a tap on a button, without ever thinking about how to detect them. But if you want to use gestures on views that don't support them, you can easily do it with the built-in UIGestureRecognizer classes.

In this tutorial, you'll learn how to add gesture recognizers to your app, both inside the storyboard editor in Xcode and programmatically.

You'll practice this by creating an app where you can move a monkey and a banana around by dragging, pinching and rotating with the help of gesture recognizers.

You'll also try out some cool extra features like:

- Adding deceleration for movement.

- Setting dependencies between gesture recognizers.

- Creating a custom UIGestureRecognizer so you can tickle the monkey.

This tutorial assumes you're familiar with the basic concepts of storyboards. If you're new to them, you may wish to check out our storyboard tutorials first.

The monkey just gave you the thumbs-up gesture, so it's time to get started! :]

Getting Started

To get started, click the Download Materials button at the top or bottom of this tutorial. Inside the zip file, you'll find two folders: begin and end.

Open the begin folder in Xcode, then build and run the project.

You should see the following in your device or simulator:

UIGestureRecognizer Overview

Before you get started, here's a brief overview of why UIGestureRecognizers are so handy and how to use them.

Detecting gestures required a lot more work before UIGestureRecognizers were available. If you wanted to detect a swipe, for instance, you had to override touch-handling methods like touchesBegan, touchesMoved and touchesEnded in a UIView and track every touch yourself. This created subtle bugs and inconsistencies across apps because each programmer wrote slightly different code to detect those touches.

In iOS 3.2, Apple came to the rescue with the UIGestureRecognizer classes. These provide a default implementation to detect common gestures like taps, pinches, rotations, swipes, pans and long presses. Using them not only saves a ton of code, but it also makes your apps work properly. Of course, you can still use the old touch-handling methods if your app requires them.

To use UIGestureRecognizer, perform the following steps:

- Create a gesture recognizer: When you create a gesture recognizer, you specify a target and action so the gesture recognizer can send you updates when the gesture starts, changes or ends.

- Add the gesture recognizer to a view: You associate each gesture recognizer with one, and only one, view. When a touch occurs within the bounds of that view, the gesture recognizer checks whether it matches the type of touch it's looking for. If it finds a match, it notifies the target.

You can perform these two steps programmatically, which you'll do later in this tutorial. But it's even easier to do with the storyboard editor, which you'll use next.

Using the UIPanGestureRecognizer

Open Main.storyboard. Click the Plus button at the top to open the Library.

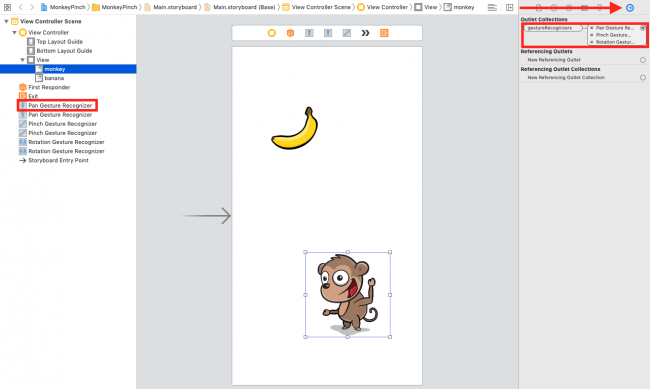

In the Library panel, look for the pan gesture recognizer object and drag it onto the monkey image view. This creates both the pan gesture recognizer and its association with the monkey image view:

You can verify the connection by clicking on the monkey image view, opening the Connections inspector via View > Inspectors > Show Connections Inspector, and making sure the pan gesture recognizer is in the gestureRecognizers Outlet Collection.

The begin project connected two other gesture recognizers for you: the Pinch Gesture Recognizer and the Rotation Gesture Recognizer. It also connected the pan, pinch and rotation gesture recognizers to the banana image view.

So why did you associate the UIGestureRecognizer with the image view instead of the view itself?

You could connect it to the view if that makes the most sense for your project. But since you tied it to the monkey, you know that any touches are within the bounds of the monkey. If this is what you want, you're good to go.

If you want to detect touches beyond the bounds of the image, you'll need to add the gesture recognizer to the view itself. But note that you'll need to write additional code to check whether the user is touching within the bounds of the image and to react accordingly.

Now that you've created the pan gesture recognizer and associated it with the image view, you have to write the action so something actually happens when the pan occurs.

Implementing the Panning Gesture

Open ViewController.swift and add the following method right below viewDidLoad(), inside ViewController:

```swift
@IBAction func handlePan(_ gesture: UIPanGestureRecognizer) {
  // 1
  let translation = gesture.translation(in: view)

  // 2
  guard let gestureView = gesture.view else {
    return
  }

  gestureView.center = CGPoint(
    x: gestureView.center.x + translation.x,
    y: gestureView.center.y + translation.y
  )

  // 3
  gesture.setTranslation(.zero, in: view)
}
```

The UIPanGestureRecognizer calls this method when it first detects a pan gesture, then continuously as the user continues to pan, and one last time when the pan completes, usually when the user's finger lifts.

Here's what's going on in this code:

- The UIPanGestureRecognizer passes itself as an argument to this method. You can retrieve the amount the user's finger moved by calling translation(in:). You then use that amount to move the center of the monkey the same distance.

- Note that instead of hard-coding the monkey image view into this method, you get a reference to the monkey image view by calling gesture.view. This makes your code more generic so that you can reuse this same routine for the banana image view later.

- It's important to set the translation back to zero once you finish. Otherwise, the translation will keep compounding each time and you'll see your monkey move rapidly off the screen!
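To see why that reset matters, here is a small UIKit-free simulation of the pan callback along the x axis only. It assumes, as a simplification, that translation(in:) reports how far the finger has moved since the translation was last reset (or since the gesture began); PanModel and finalCenter(start:offsets:reset:) are hypothetical names used only for illustration.

```swift
// Hypothetical, UIKit-free model of handlePan(_:) along the x axis.
// Assumption: translation = finger offset minus the offset at the last reset.
struct PanModel {
  var center: Double
  // Finger offset (from where the gesture began) at the last translation reset.
  private var translationOrigin = 0.0

  init(center: Double) {
    self.center = center
  }

  // One "changed" callback; fingerOffset is measured from where the gesture began.
  mutating func handlePan(fingerOffset: Double, resetTranslation: Bool) {
    let translation = fingerOffset - translationOrigin
    center += translation
    if resetTranslation {
      // Plays the role of gesture.setTranslation(.zero, in: view).
      translationOrigin = fingerOffset
    }
  }
}

func finalCenter(start: Double, offsets: [Double], reset: Bool) -> Double {
  var model = PanModel(center: start)
  for offset in offsets {
    model.handlePan(fingerOffset: offset, resetTranslation: reset)
  }
  return model.center
}

// The finger drags 30 points in three callbacks.
print(finalCenter(start: 100, offsets: [10, 20, 30], reset: true))   // 130.0
print(finalCenter(start: 100, offsets: [10, 20, 30], reset: false))  // 160.0
```

With the reset, the view moves exactly as far as the finger did (30 points). Without it, each callback re-applies the whole distance traveled so far, so the view runs ahead of the finger.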

Now that this method is complete, you'll hook it up to the UIPanGestureRecognizer.

Connecting the Panning Gesture to the Recognizer

In the document outline for Main.storyboard, Control-drag from the monkey's pan gesture recognizer to the view controller. Select handlePan: from the pop-up.

At this point, your Connections inspector for the pan gesture recognizer should look like this:

Build and run and try to drag the monkey. It doesn't work?! This is because views that normally don't accept touches, like image views, have user interaction disabled by default.

Letting the Image Views Accept Touches

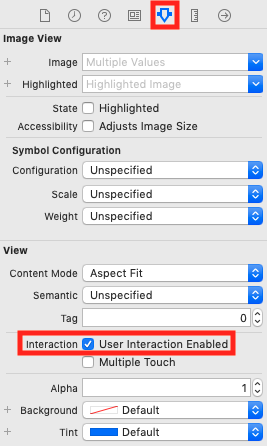

Fix this by selecting both image views, opening the Attributes inspector and checking User Interaction Enabled.

Build and run again. This time, you can drag the monkey around the screen!

Notice that you still can't drag the banana because you need to connect its own pan gesture recognizer to handlePan(_:). You'll do that now.

- Control-drag from the banana pan gesture recognizer to the view controller and select handlePan:.

- Double-check to make sure you've checked User Interaction Enabled on the banana as well.

Build and run. You can now drag both image views across the screen. It's pretty easy to implement such a cool and fun effect, eh?

Adding Deceleration to the Images

Apple apps and controls typically have a bit of deceleration before an animation finishes. You see this when scrolling a web view, for example. You'll often want to use this type of behavior in your apps.

There are many ways of doing this. The approach you'll use for this tutorial produces a nice effect without much effort. Here's what you'll do:

- Detect when the gesture ends.

- Calculate the speed of the touch.

- Animate the object moving to a final destination based on the touch speed.

And here's how you'll accomplish those goals:

- To detect when the gesture ends: Multiple calls to the gesture recognizer's callback occur as the gesture recognizer's state changes. Examples of those states are began, changed and ended. You can find the current state of a gesture recognizer by looking at its state property.

- To detect the touch velocity: Some gesture recognizers return additional information. For example, UIPanGestureRecognizer has a handy method called velocity(in:) that returns, you guessed it, the velocity!

Note: You can view a full list of the methods for each gesture recognizer in the API guide.

Easing Out Your Animations

Start by adding the following to the bottom of handlePan(_:) in ViewController.swift:

```swift
guard gesture.state == .ended else {
  return
}

// 1
let velocity = gesture.velocity(in: view)
let magnitude = sqrt((velocity.x * velocity.x) + (velocity.y * velocity.y))
let slideMultiplier = magnitude / 200

// 2
let slideFactor = 0.1 * slideMultiplier

// 3
var finalPoint = CGPoint(
  x: gestureView.center.x + (velocity.x * slideFactor),
  y: gestureView.center.y + (velocity.y * slideFactor)
)

// 4
finalPoint.x = min(max(finalPoint.x, 0), view.bounds.width)
finalPoint.y = min(max(finalPoint.y, 0), view.bounds.height)

// 5
UIView.animate(
  withDuration: Double(slideFactor * 2),
  delay: 0,
  // 6
  options: .curveEaseOut,
  animations: {
    gestureView.center = finalPoint
  })
```

This simple deceleration equation uses the following strategy:

- Calculates the length of the velocity vector (i.e. the magnitude).

- Decreases the speed if the length is < 200. Otherwise, it increases it.

- Calculates a final point based on the velocity and the slideFactor.

- Makes sure the final point is within the view's bounds.

- Animates the view to the final resting place.

- Uses the ease-out animation option to slow the movement over time.
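The arithmetic behind this strategy can be checked without a device. The sketch below restates the calculation from handlePan(_:) with plain Double values; Point, Size, Fling and fling(from:velocity:in:) are hypothetical stand-ins for CGPoint, CGSize and the animation parameters, so treat it as an illustration of the math rather than code to paste into the project.

```swift
// Hypothetical stand-ins for CGPoint and CGSize so the math runs anywhere.
struct Point: Equatable {
  var x: Double
  var y: Double
}

struct Size {
  var width: Double
  var height: Double
}

// Where the view should come to rest and how long the ease-out should take.
struct Fling {
  let destination: Point
  let duration: Double
}

func fling(from center: Point, velocity: Point, in bounds: Size) -> Fling {
  // Length of the velocity vector, relative to 200 points per second.
  let magnitude = (velocity.x * velocity.x + velocity.y * velocity.y).squareRoot()
  let slideMultiplier = magnitude / 200
  let slideFactor = 0.1 * slideMultiplier

  // Project the center forward along the velocity.
  var finalPoint = Point(
    x: center.x + velocity.x * slideFactor,
    y: center.y + velocity.y * slideFactor
  )

  // Keep the resting place inside the container.
  finalPoint.x = min(max(finalPoint.x, 0), bounds.width)
  finalPoint.y = min(max(finalPoint.y, 0), bounds.height)

  // The tutorial animates for twice the slide factor.
  return Fling(destination: finalPoint, duration: slideFactor * 2)
}

let result = fling(
  from: Point(x: 100, y: 100),
  velocity: Point(x: 1000, y: 0),
  in: Size(width: 400, height: 800)
)
print(result.destination, result.duration)
```

For a fling of 1,000 points per second to the right from (100, 100) in a 400 by 800 container, the slide factor is 0.5, so the projected x of 600 is clamped to the right edge at 400 and the animation lasts one second. A gentle 100 points per second gives a slide factor of only 0.05, so the view drifts just 5 points over a tenth of a second.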

Build and run to try it out. You should now have some basic but nice deceleration! Feel free to play around with it and improve it. If you come up with a better implementation, please share it in the forum discussion at the end of this article.

Pinch and Rotation Gestures

Your app is coming along great so far, but it would be even cooler if you could scale and rotate the image views by using pinch and rotation gestures as well!

The begin project gives you a great start. It created handlePinch(_:) and handleRotate(_:) for you. It also attached pinch and rotation gesture recognizers to the monkey image view and the banana image view. Now, you'll complete the implementation.

Implementing the Pinch and Rotation Gestures

Open ViewController.swift. Add the following to handlePinch(_:):

```swift
guard let gestureView = gesture.view else {
  return
}

gestureView.transform = gestureView.transform.scaledBy(
  x: gesture.scale,
  y: gesture.scale
)
gesture.scale = 1
```

Next, add the following to handleRotate(_:):

```swift
guard let gestureView = gesture.view else {
  return
}

gestureView.transform = gestureView.transform.rotated(
  by: gesture.rotation
)
gesture.rotation = 0
```

Just like you got the translation from the UIPanGestureRecognizer, you get the scale and rotation from the UIPinchGestureRecognizer and UIRotationGestureRecognizer.

Every view has a transform applied to it, which gives information on the rotation, scale and translation that the view should have. Apple has many built-in methods to make working with a transform easier. These include CGAffineTransform.scaledBy(x:y:) to scale a given transform and CGAffineTransform.rotated(by:) to rotate a given transform.
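To make that composition concrete, here is a minimal model of a 2D affine transform with the same a, b, c, d, tx, ty fields as CGAffineTransform. Transform2D is a hypothetical stand-in, and its update formulas assume that, like CGAffineTransform's scaledBy(x:y:) and rotated(by:), each call applies the new scale or rotation before the existing transform.

```swift
import Foundation

// Hypothetical stand-in for CGAffineTransform with the same six fields.
// The identity transform is a = d = 1, everything else 0.
struct Transform2D {
  var a = 1.0, b = 0.0, c = 0.0, d = 1.0, tx = 0.0, ty = 0.0

  // Applies a scale before the existing transform (as scaledBy(x:y:) does).
  func scaledBy(x sx: Double, y sy: Double) -> Transform2D {
    Transform2D(a: a * sx, b: b * sx, c: c * sy, d: d * sy, tx: tx, ty: ty)
  }

  // Applies a rotation in radians before the existing transform (as rotated(by:) does).
  func rotated(by radians: Double) -> Transform2D {
    let cosA = cos(radians)
    let sinA = sin(radians)
    return Transform2D(
      a: cosA * a + sinA * c,
      b: cosA * b + sinA * d,
      c: -sinA * a + cosA * c,
      d: -sinA * b + cosA * d,
      tx: tx,
      ty: ty
    )
  }

  // For uniform scaling with no skew, these recover the accumulated scale and angle.
  var scale: Double { (a * a + b * b).squareRoot() }
  var angle: Double { atan2(b, a) }
}

// Scale 2x, then rotate a quarter turn, starting from the identity.
let transform = Transform2D().scaledBy(x: 2, y: 2).rotated(by: Double.pi / 2)
print(transform.scale, transform.angle)
```

Reading the scale back as the length of (a, b) and the angle as atan2(b, a) only works for uniform scaling without skew, which is all the pinch and rotation handlers in this tutorial produce. Repeated calls accumulate: two rotations of 0.3 and 0.2 radians leave an angle of 0.5.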

Here, you use these methods to update the view's transform based on the user's gestures.

Once again, since you're updating the view each time the gesture updates, it's very important to reset the scale to 1 and the rotation to 0 afterward. Otherwise, each update compounds on top of the previous ones and the view quickly spins or grows out of control.
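Here is a quick way to see that compounding numerically. This UIKit-free sketch assumes the pinch recognizer's scale is the current finger spread divided by the spread at the last reset (or at the start of the gesture); finalScale(spreads:resetScale:) is a hypothetical helper, and rotation behaves the same way with addition in place of multiplication.

```swift
// Hypothetical model of handlePinch(_:): spreads are finger distances relative
// to the distance when the pinch began (1.5 means 50% wider).
func finalScale(spreads: [Double], resetScale: Bool) -> Double {
  var viewScale = 1.0      // stands in for the scale stored in the view's transform
  var spreadAtReset = 1.0  // finger distance when gesture.scale was last reset
  for spread in spreads {
    let reported = spread / spreadAtReset  // what gesture.scale would report
    viewScale *= reported                  // transform.scaledBy(x:y:)
    if resetScale {
      spreadAtReset = spread               // gesture.scale = 1
    }
  }
  return viewScale
}

let spreads = [1.1, 1.2, 1.5]
print(finalScale(spreads: spreads, resetScale: true))   // about 1.5
print(finalScale(spreads: spreads, resetScale: false))  // about 1.98
```

The fingers only spread 50% wider, so the view should end up 1.5 times its size. Without the reset it grows to nearly twice its size, because every callback multiplies in the whole change since the pinch began.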

Now, hook these methods up in the storyboard editor. Open Main.storyboard and perform the following steps:

- As you did previously, connect the two pinch gesture recognizers to the view controller's handlePinch:.

- Connect the two rotation gesture recognizers to the view controller's handleRotate:.

Your view controller connections should now look like this:

Build and run on a device, if possible, because pinches and rotations are hard to do on the simulator.

If you are running on the simulator, hold down the Option key and drag to simulate two fingers. Then hold down Shift and Option at the same time to move the simulated fingers together to a different position.

You can now scale and rotate the monkey and the banana!

Simultaneous Gesture Recognizers

You may notice that if you put one finger on the monkey and one on the banana, you can drag them around at the same time. Kinda cool, eh?

However, you'll notice that if you try to drag the monkey around and, in the middle of dragging, bring down a second finger to pinch to zoom, it doesn't work. By default, once one gesture recognizer on a view "claims" the gesture, other gesture recognizers can't take over.

You can change this by implementing a method of UIGestureRecognizerDelegate.

Allowing Two Gestures to Happen at Once

Open ViewController.swift. Below ViewController, create a ViewController class extension and conform it to UIGestureRecognizerDelegate:

```swift
extension ViewController: UIGestureRecognizerDelegate {
}
```

Then, inside the extension, implement one of the delegate's optional methods:

```swift
func gestureRecognizer(
  _ gestureRecognizer: UIGestureRecognizer,
  shouldRecognizeSimultaneouslyWith otherGestureRecognizer: UIGestureRecognizer
) -> Bool {
  return true
}
```

This method tells the gesture recognizer whether it's OK to recognize a gesture if another recognizer has already detected a gesture. The default implementation always returns false, but you've switched it to always return true.

Next, open Main.storyboard and connect each gesture recognizer's delegate outlet to the view controller. You'll connect six gesture recognizers in total.

Build and run again. Now, you tin drag the monkey, pinch to scale it and continue dragging afterward! You can even scale and rotate at the same time in a natural style. This makes for a much nicer feel for the user.

Programmatic UIGestureRecognizers

So far, you've created gesture recognizers with the storyboard editor, but what if you wanted to do things programmatically?

Well, why not try it out? You'll do so by adding a tap gesture recognizer to play a sound effect when you tap either of the image views.

To play a sound, you'll need to access AVFoundation. At the top of ViewController.swift, add:

```swift
import AVFoundation
```

Add the following changes to ViewController.swift, just before viewDidLoad():

```swift
private var chompPlayer: AVAudioPlayer?

func createPlayer(from filename: String) -> AVAudioPlayer? {
  // Look up the .caf sound file in the app bundle.
  guard let url = Bundle.main.url(
    forResource: filename,
    withExtension: "caf"
  ) else {
    return nil
  }

  do {
    let player = try AVAudioPlayer(contentsOf: url)
    player.prepareToPlay()
    return player
  } catch {
    // Return nil instead of an unusable, empty player.
    print("Error loading \(url.absoluteString): \(error)")
    return nil
  }
}
```

Add the following code at the end of viewDidLoad():

```swift
// 1
let imageViews = view.subviews.filter {
  $0 is UIImageView
}

// 2
for imageView in imageViews {
  // 3
  let tapGesture = UITapGestureRecognizer(
    target: self,
    action: #selector(handleTap)
  )

  // 4
  tapGesture.delegate = self
  imageView.addGestureRecognizer(tapGesture)

  // TODO: Add a custom gesture recognizer too
}

chompPlayer = createPlayer(from: "chomp")
```

The begin project contains handleTap(_:). Add the following inside of this method:

```swift
chompPlayer?.play()
```

The audio playing code is outside the scope of this tutorial, but if you want to learn more, check out our AVFoundation Course. The important part is in viewDidLoad():

- Create an array of image views, in this case the monkey and the banana.

- Loop through the array.

- Create a UITapGestureRecognizer for each image view, specifying the callback. This is an alternate way of adding gesture recognizers. Previously, you added the recognizers to the storyboard.

- Set the delegate of the recognizer programmatically and add the recognizer to the image view.

That's it! Build and run. You can now tap the image views for a sound effect!

Setting UIGestureRecognizer Dependencies

Everything works pretty well, except for one small annoyance. Dragging an object very slightly causes it to both pan and play the sound effect. You really want the sound effect to play only when you tap an object, not when you pan it.

To solve this, you could remove or modify the delegate callback to behave differently when a tap and a pan coincide. But there's another approach you can use with gesture recognizers: setting dependencies.

You can call a method called require(toFail:) on a gesture recognizer. Can you guess what it does? ;]

Open Main.storyboard and open another editor on the right by clicking the button at the top-right of the storyboard panel.

On the left of the new panel that just opened, click the button with four squares. Finally, select the third item from the list, Automatic, which will ensure that ViewController.swift shows there.

Now Control-drag from the monkey pan gesture recognizer to just below the class declaration and connect it to an outlet named monkeyPan. Repeat this for the banana pan gesture recognizer, but name the outlet bananaPan.

Make sure you give the recognizers the right names to avoid mixing them up! You can check this in the Connections inspector.

Next, add these two lines to viewDidLoad(), right before the TODO:

```swift
tapGesture.require(toFail: monkeyPan)
tapGesture.require(toFail: bananaPan)
```

Now, the app will only call the tap gesture recognizer if it doesn't detect a pan. Pretty cool, eh?

Creating Custom UIGestureRecognizers

At this point, you know pretty much everything you need to know to use the built-in gesture recognizers in your apps. But what if you want to detect some kind of gesture that the built-in recognizers don't support?

Well, you can always write your own! For example, what if you wanted to detect a "tickle" when the user rapidly moves the object left and right several times? Ready to do this?

"Tickling" the Monkey

Create a new file via File ▸ New ▸ File… and pick the iOS ▸ Source ▸ Swift File template. Name the file TickleGestureRecognizer.

Then replace the import statement in TickleGestureRecognizer.swift with the following:

```swift
import UIKit
// Exposes the subclassing API, such as a settable state and the touch methods.
import UIKit.UIGestureRecognizerSubclass

class TickleGestureRecognizer: UIGestureRecognizer {
  // 1
  private let requiredTickles = 2
  private let distanceForTickleGesture: CGFloat = 25

  // 2
  enum TickleDirection {
    case unknown
    case left
    case right
  }

  // 3
  private var tickleCount = 0
  private var tickleStartLocation: CGPoint = .zero
  private var latestDirection: TickleDirection = .unknown
}
```

Here's what you just declared, step-by-step:

- The constants that define what the gesture will need. Note that you infer requiredTickles as an Int, but you need to specify distanceForTickleGesture as a CGFloat. If you don't, the compiler will infer it as an Int, which causes difficulties when calculating with CGPoints later.

- The possible tickle directions.

- Three properties to go on track of this gesture, which are:

- tickleCount: How many times the user's finger has moved the minimum number of points in a new direction, including the first movement. Once tickleCount exceeds requiredTickles, that is, after three such movements, you count it as a tickle gesture.

- tickleStartLocation: The point where the user started moving in this tickle. You'll update this each time the user switches direction, while moving a minimum number of points.

- latestDirection: The latest direction the finger moved, which starts as unknown. After the user moves a minimum amount, you'll check whether the gesture went left or right and update this accordingly.

Of course, these properties are specific to the gesture you're detecting here. You'll create your own if you're making a recognizer for a different type of gesture.

Managing the Gesture's State

One of the things that you'll change is the state of the gesture. When a tickle completes, you'll change the state of the gesture to ended.

Switch to TickleGestureRecognizer.swift and add the following methods to the class:

```swift
override func reset() {
  super.reset()
  tickleCount = 0
  latestDirection = .unknown
  tickleStartLocation = .zero

  // If the tickle never completed, the gesture failed.
  if state == .possible {
    state = .failed
  }
}

override func touchesBegan(_ touches: Set<UITouch>, with event: UIEvent) {
  super.touchesBegan(touches, with: event)
  guard let touch = touches.first else {
    return
  }

  tickleStartLocation = touch.location(in: view)
}

override func touchesMoved(_ touches: Set<UITouch>, with event: UIEvent) {
  super.touchesMoved(touches, with: event)
  guard let touch = touches.first else {
    return
  }

  let tickleLocation = touch.location(in: view)
  let horizontalDifference = tickleLocation.x - tickleStartLocation.x

  // Ignore movements shorter than the minimum tickle distance.
  if abs(horizontalDifference) < distanceForTickleGesture {
    return
  }

  let direction: TickleDirection
  if horizontalDifference < 0 {
    direction = .left
  } else {
    direction = .right
  }

  // Count the first qualifying move and every change of direction.
  if latestDirection == .unknown ||
    (latestDirection == .left && direction == .right) ||
    (latestDirection == .right && direction == .left) {
    tickleStartLocation = tickleLocation
    latestDirection = direction
    tickleCount += 1

    if state == .possible && tickleCount > requiredTickles {
      state = .ended
    }
  }
}

override func touchesEnded(_ touches: Set<UITouch>, with event: UIEvent) {
  super.touchesEnded(touches, with: event)
  reset()
}

override func touchesCancelled(_ touches: Set<UITouch>, with event: UIEvent) {
  super.touchesCancelled(touches, with: event)
  reset()
}
```

There's a lot of code here and you don't need to know the specifics for this tutorial.

To give you a general idea of how it works, you're overriding UIGestureRecognizer's reset(), touchesBegan(_:with:), touchesMoved(_:with:), touchesEnded(_:with:) and touchesCancelled(_:with:) methods. And you're writing custom code to look at the touches and detect the gesture.

Once you've found the gesture, you'll want to send updates to the callback method. You do this by changing the state property of the gesture recognizer.

Once the gesture begins, you'll usually set the state to .began. After that, you'll send any updates with .changed and finalize it with .ended.

For this simple gesture recognizer, once the user has tickled the object, that's it. You'll just mark it as .ended.
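If you want to experiment with the tickle logic without touching a screen, the sketch below restates touchesMoved(_:with:) as a plain struct fed with x coordinates. TickleTracker, TrackerState and isTickle(startX:moves:) are hypothetical names; the thresholds mirror requiredTickles and distanceForTickleGesture above, while real touch handling, reset() and the other recognizer states are left out.

```swift
// Hypothetical, UIKit-free restatement of TickleGestureRecognizer's
// touchesMoved(_:with:) logic, driven by x coordinates.
enum TickleDirection {
  case unknown, left, right
}

enum TrackerState {
  case possible, ended
}

struct TickleTracker {
  let requiredTickles = 2
  let distanceForTickleGesture = 25.0

  private(set) var state = TrackerState.possible
  private(set) var tickleCount = 0
  private var tickleStartX: Double
  private var latestDirection = TickleDirection.unknown

  // Plays the role of touchesBegan(_:with:).
  init(startX: Double) {
    tickleStartX = startX
  }

  // Plays the role of touchesMoved(_:with:).
  mutating func moved(toX x: Double) {
    let horizontalDifference = x - tickleStartX

    // Ignore movements shorter than the minimum distance.
    if abs(horizontalDifference) < distanceForTickleGesture {
      return
    }

    let direction: TickleDirection = horizontalDifference < 0 ? .left : .right

    // direction is never .unknown, so this covers both the first qualifying
    // move and every reversal, like the three-way check in the recognizer.
    if direction != latestDirection {
      tickleStartX = x
      latestDirection = direction
      tickleCount += 1

      if state == .possible && tickleCount > requiredTickles {
        state = .ended
      }
    }
  }
}

func isTickle(startX: Double, moves: [Double]) -> Bool {
  var tracker = TickleTracker(startX: startX)
  for x in moves {
    tracker.moved(toX: x)
  }
  return tracker.state == .ended
}

print(isTickle(startX: 0, moves: [30, 0, 30]))  // true
```

With the rule tickleCount > requiredTickles, a tickle needs three qualifying movements, for example right, left, right. Two movements, or several movements in the same direction, leave the state at .possible.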

OK, now to use this new recognizer!

Implementing Your Custom Recognizer

Open ViewController.swift and make the following changes.

Add the following code to the top of the class, right after chompPlayer:

```swift
private var laughPlayer: AVAudioPlayer?
```

In viewDidLoad(), add the gesture recognizer to the image view by replacing the TODO:

```swift
let tickleGesture = TickleGestureRecognizer(
  target: self,
  action: #selector(handleTickle)
)
tickleGesture.delegate = self
imageView.addGestureRecognizer(tickleGesture)
```

At the end of viewDidLoad(), add:

```swift
laughPlayer = createPlayer(from: "laugh")
```

Finally, create a new method at the end of the class to handle your tickle gesture:

```swift
@objc func handleTickle(_ gesture: TickleGestureRecognizer) {
  laughPlayer?.play()
}
```

Using this custom gesture recognizer is as simple as using the built-in ones!

Build and run. "Hehe, that tickles!"

Where to Go From Here?

Download the completed version of the project using the Download Materials button at the top or bottom of this tutorial.

Congratulations, you're now a master of gesture recognizers, both built-in and custom ones! Touch interaction is such an important part of iOS devices, and UIGestureRecognizer is the key to adding easy-to-use gestures beyond simple button taps.

I hope you enjoyed this tutorial! If you have any questions or comments, please join the discussion below.

Source: https://www.raywenderlich.com/6747815-uigesturerecognizer-tutorial-getting-started

Posted by: mierascumbrues.blogspot.com
